Microsoft recently announced the public preview of Azure Data Factory. The blurb makes it sound like cloud SSIS:

Compose data storage, movement, and processing services into streamlined data pipelines

Drive business decisions with trusted information. Tie your unstructured and structured data ecosystems together across on-premises and cloud sources using Hadoop processing. Monitor your pipelines and service health using the Azure portal. Consume trusted data with BI and analytics tools and applications.

http://azure.microsoft.com/en-us/services/data-factory/

But don’t be fooled!

Unfortunately in reality it’s a weird hack that requires a collection of hand written json scripts that hardcode the source,transform and destination metadata and need to be registered from your local machine using powershell.

The ADF documentation includes a simple example that copies the contents of a two column, two row CSV into an azure database table. http://azure.microsoft.com/en-us/documentation/articles/data-factory-get-started/

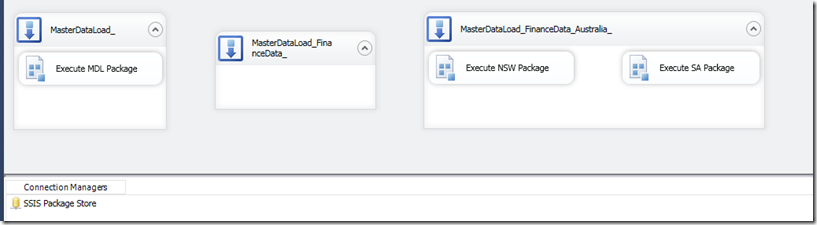

Setup tasks aside, I’ve shown the equivalent development objects for this side by side below (SSIS on the left, ADF on the right)

Recreating a simple CSV->DB table SSIS data flow requires 100ish lines of manually written json scripts (the azure data factory quickstart guide suggests using "notepad") split across multiple files and then locally executed azure powershell cmdlets to 'register' the json with the data factory.

Yes, that scripting needs to be freehanded. Yes, you need to manually upload the individual files to your azure blob storage. Yes, you need to manually execute azure powershell cmdlets locally to ‘register’ your uploaded json to the data factory. No, there is no pipeline/json authoring webapp in the ADF console that has templates, snippets, intellisense or some kind of syntax validation.

I don’t know whether I’m looking at this wrong, but moving even a trivial ETL workflow into this would be a significant effort. And debugging that json…………….. No thanks. I haven’t tried messing up the CSV or introducing a breaking change to the destination schema to see what kind of useful errors it spits out yet but scripting error messages tend to be fairly cryptic and I don’t see why this would be any different. the error messages for the trivial schema issues I introduced were pretty good actually…

The cost looks to be about a dollar a run for the above workflow (3 activities: read, copy, load) unless I’m reading it wrong (I think I am, as I’ve left the simple example experiment running indefinitely and it’s only showing as 0.58 activities). I might execute an SSIS project 20-50 times a day during development. A few (dozen) ADF dev runs to iron out some bugs might burn through the funds a bit too quickly.

I’m not sure what the purpose of this is. It sounds like there’s some cool stuff in there in regards to data monitoring and such, but not at the cost of basic ETL usability. Is it meant to be an ETL or (meta)data analysis tool for web devs, hence the reliance on json?

I can’t see myself using or recommending this in its current state for any kind of BI or data analytics ETL/ELT/ELTL work. It doesn’t do anything unique and the lack of a development gui for common tasks is a deal-breaker.

If you have a large volume of data in the cloud then it might be more worthwhile to spin up in the same affinity group as your blob storage a dedicated VM with SSIS installed and process it there. Use the new Azure Automation feature to spin this VM up and down on a schedule. A beefy VM will set you back a dollar a two an hour. And you can do your dev locally for free*.

This is a shame, and a bit of a shock really. I’ve been playing around in Azure Machine Learning, another azure preview offering, and the webapp gui in that is brilliant. It’s probably less buggy than the current SSDT 2012 SSIS gui! You can drag/drop to build experiments to train your models and publish them from within the gui.