The SQL Server engine offers numerous methods to perform minimally logged inserts into an empty clustered table (http://technet.microsoft.com/en-us/library/ms191244%28v=sql.105%29.aspx) if we meet the prerequisites (http://technet.microsoft.com/en-us/library/ms190422%28v=sql.105%29.aspx), but what about when using SSIS? There’s quite a few places online that mention that it’s supported as an aside but don’t give away too much info on what the prerequisites are.

Going from the prerequisites listed for the other bulk inserts you’d think that a a sorted insert into an OleDb destination with Table lock turned on, Rows per Batch and Maximum Insert Commit Size left to the defaults, and an ORDER hint on the clustered column(s) would be enough, but is it?

Usually, no. Inserts into empty clustered tables (B-trees) via SSIS are minimally logged if the following is true:

- The Bulk Insert task is used with a flat file sorted in clustered key order and the following options are set:

- Table lock: checked

- Batch size: 0

- SortedData has the clustered key order specified

only useful if your source is a ordered flat file. Not much use when moving data from another database table.

- The SQL Destination task is used with a source sorted in clustered key order and the following options are set:

- Table lock: checked

- Order columns has the clustered key order specified

only useful when the SSIS package is executed on the same server as the SQL instance.

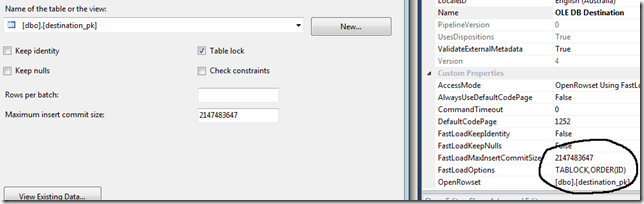

- The OleDb destination task is used with a source sorted in clustered key order and the following options are set:

- Table lock: checked

- Rows per batch: blank (default)

- Maximum insert commit size: 0

- FastLoadOptions has order hint added: TABLOCK,ORDER(<Clustered Columns,..>)

And there you have it! Keep reading if you’re interested in the “why” and an explanation of the warning message you’ll get when setting the insert commit size to 0, but all the important info is above.

The Investigation

First, we need some data.

create table dbo.source_data (id int primary key, data char(100) not null)

go

create table dbo.destination_pk (id int primary key, data char(100) not null)

go

insert into dbo.source_data (id,data)

select top 850000 row_number() over (order by sysdatetime()), 'blah blah' from master..spt_values a, master..spt_values b, master..spt_values c

go

sp_spaceused 'dbo.source_data'

go

The above code will generate about 95 meg of data. I’ve tried to stay under 100 meg for an important reason that’s related to the internal SSIS buffers (but more on that later).

Next, we need some way to see what’s happening in the logs. For that we can use the undocumented fn_dblog() function (http://www.confio.com/logicalread/sql-server-dbcc-log-command-details-and-example/).

-- Log records and record size

SELECT COUNT(*)AS numrecords,

CAST((COALESCE(SUM([Log Record LENGTH]), 0))

/ 1024. / 1024. AS NUMERIC(12, 2)) AS size_mb

FROM sys.fn_dblog(NULL, NULL) AS D

WHERE AllocUnitName = 'dbo.destination_pk' OR AllocUnitName LIKE 'dbo.destination_pk.%';

-- Breakdown of Log Record Types

SELECT Operation, Context,

AVG([Log Record LENGTH]) AS AvgLen, COUNT(*) AS Cnt

FROM sys.fn_dblog(NULL, NULL) AS D

WHERE AllocUnitName = 'dbo.destination_pk' OR AllocUnitName LIKE 'dbo.destination_pk.%'

GROUP BY Operation, Context, ROUND([Log Record LENGTH], -2)

ORDER BY AvgLen, Operation, Context;

(The above SQL for retrieving the details for these tests was ruthlessly stolen from: http://sqlmag.com/t-sql/minimally-logged-inserts)

Baseline

We need to perform a minimally logged insert into our destination table to have something to compare SSIS against. I exported our test data into a text file in native format using bcp (c:\> bcp "SELECT id,data from dbo.source_data order by id" queryout c:\myout.txt -T -S. -dtest -n)

I then imported it back using BULK INSERT

bulk insert test.dbo.destination_pk from 'c:\myout.txt'

with(

DATAFILETYPE = 'native'

,TABLOCK

,ORDER(ID))

The results from fn_dblog() are

| numrecords | size_mb | ||

| 4716 | 0.31 | ||

| Operation | Context | AvgLen | Cnt |

| LOP_SET_BITS | LCX_GAM | 60 | 1529 |

| LOP_SET_BITS | LCX_IAM | 60 | 1529 |

| LOP_FORMAT_PAGE | LCX_IAM | 84 | 1 |

| LOP_MODIFY_ROW | LCX_PFS | 88 | 1649 |

| LOP_MODIFY_ROW | LCX_IAM | 100 | 8 |

This tells us that there were 4176 log entries that take up 0.31 of a megabyte. Quite a bit less than the 850,000 rows and 95meg of data, so we can be fairly sure the insert was minimally logged.

SSIS Scenario 1 – Default Data Flow

A simple data flow like the one described in the opening paragraphs. A default package with a data flow OleDb source (select id,data from dbo.source_data order by id) into an OleDb destination with the following settings:

- Table lock: checked. Keep identity, Keep nulls and Check constraints unchecked

- Rows per batch: blank (default)

- Maximum insert commit size: 2147483647 (default)

- FastLoadOptions has order hint added: TABLOCK,ORDER(ID)

The data flow DefaultBufferMaxRows and DefaultBufferSize are left at the default of 10000 and 10485760 respectively.

The results from fn_dblog() are

| numrecords | size_mb | ||

| 963726 | 165.54 | ||

| Operation | Context | AvgLen | Cnt |

| LOP_DELETE_SPLIT | LCX_INDEX_INTERIOR | 60 | 43 |

| LOP_SET_BITS | LCX_GAM | 60 | 1530 |

| LOP_SET_BITS | LCX_IAM | 60 | 1530 |

| LOP_INSYSXACT | LCX_CLUSTERED | 62 | 47332 |

| LOP_INSYSXACT | LCX_INDEX_INTERIOR | 62 | 23928 |

| LOP_MODIFY_ROW | LCX_PFS | 81 | 13511 |

| LOP_FORMAT_PAGE | LCX_HEAP | 84 | 11833 |

| LOP_FORMAT_PAGE | LCX_IAM | 84 | 1 |

| LOP_FORMAT_PAGE | LCX_INDEX_INTERIOR | 84 | 44 |

| LOP_INSERT_ROWS | LCX_INDEX_INTERIOR | 84 | 11877 |

| LOP_MODIFY_HEADER | LCX_HEAP | 84 | 11833 |

| LOP_MODIFY_HEADER | LCX_INDEX_INTERIOR | 84 | 43 |

| LOP_MODIFY_ROW | LCX_IAM | 100 | 8 |

| LOP_INSERT_ROWS | LCX_CLUSTERED | 196 | 840170 |

| LOP_INSERT_ROWS | LCX_INDEX_INTERIOR | 5012 | 43 |

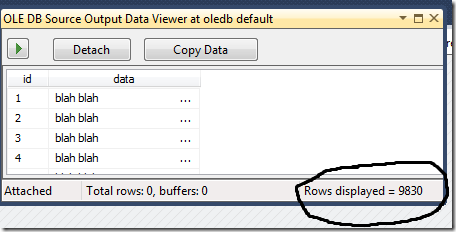

Not minimally logged, then? More log entries than we have rows, and over 50% more data. It’s also interesting that there are 840,170 individual inserts into the clustered index even though we had 850,000 rows. So that leaves 9,830 rows that appear to have been minimally logged. That looks suspiciously like the ‘average’ size we see for an SSIS buffer (you know how the rows in the dataflow seem to increase by approximately 10,0000 rows per refresh?). In fact if we turn on a data viewer we see that each full buffer contains 9,830 rows.

What happens when we increase the data flow buffers from the default 10,000 rows and 10 megabytes?

SSIS Scenario 2 – Maximum Data Flow Buffers

A simple data flow like the one described in scenario 1. A default package with a data flow OleDb source (select id,data from dbo.source_data order by id) into an OleDb destination with the following settings:

- Table lock: checked. Keep identity, Keep nulls and Check constraints unchecked

- Rows per batch: blank (default)

- Maximum insert commit size: 2147483647 (default)

- FastLoadOptions has order hint added: TABLOCK,ORDER(ID)

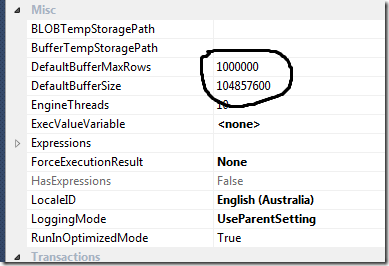

But this time we also change the DefaultBufferMaxRows and DefaultBufferSize to 1000000 and 104857600 respectively. 100 megabytes is a hard limit in SSIS for the buffer size (remember how our source data table size is just under 100 meg?)

The results from fn_dblog() are

| numrecords | size_mb | ||

| 4716 | 0.31 | ||

| Operation | Context | AvgLen | Cnt |

| LOP_SET_BITS | LCX_GAM | 60 | 1529 |

| LOP_SET_BITS | LCX_IAM | 60 | 1529 |

| LOP_FORMAT_PAGE | LCX_IAM | 84 | 1 |

| LOP_MODIFY_ROW | LCX_PFS | 88 | 1649 |

| LOP_MODIFY_ROW | LCX_IAM | 100 | 8 |

The same as our baseline! So does this mean that we can only minimally log the first 100 megabytes of a data flow? But more importantly why is this happening?

According to the OleDb Destination page on TechNet (http://msdn.microsoft.com/en-us/library/ms188439.aspx) we have the following two settings:

- Rows per batch

- Specify the number of rows in a batch. The default value of this property is –1, which indicates that no value has been assigned.

- Clear the text box in the OLE DB Destination Editor to indicate that you do not want to assign a custom value for this property.

- Maximum insert commit size

- Specify the batch size that the OLE DB destination tries to commit during fast load operations. The value of 0 indicates that all data is committed in a single batch after all rows have been processed.

- A value of 0 might cause the running package to stop responding if the OLE DB destination and another data flow component are updating the same source table. To prevent the package from stopping, set the Maximum insert commit size option to 2147483647.

- If you provide a value for this property, the destination commits rows in batches that are the smaller of (a) the Maximum insert commit size, or (b) the remaining rows in the buffer that is currently being processed.

The above description reads as if 0 and 2147483647 are interchangeable for the Maximum insert commit size, and as non-zero value will avoid the issue with locking it should be the one to use.

But a non-zero value for the Maximum insert size is treated by SSIS as “just another number” whether it’s the default 2147483647 or not, and then the last bolded point comes into play. A batch for our minimally logged insert scenario is the smallest of:

- Rows per batch. Blank means it’s ignored.

- Maximum insert commit size: 2147483647 rows

- DefaultBufferMaxRows: 10000 by default. Max of 2147483647

- DefaultBufferSize, 10485760 bytes by default. Max of 104857600

It’s the data flow properties in most cases that end up dictating the insert batch size and that’s what we saw in scenario 1. Batches are very important when inserting into an empty B-tree because only the first batch is minimally logged, after then you’re inserting into a non-empty B-tree and are fully logged. Only the first 10meg batch was minimally logged in scenario 1 and the remaining data inserted was fully logged. The buffer defaults and maximums are documented here http://msdn.microsoft.com/en-us/library/ms141031%28v=sql.105%29.aspx

What about when we set the Maximum insert commit size to 0, warnings be damned?

SSIS Scenario 3 – Maximum Inset Commit Size Set to 0

A simple data flow like the one described in the above. A default package with a data flow OleDb source (select id,data from dbo.source_data order by id) into an OleDb destination with the following settings:

- Table lock: checked. Keep identity, Keep nulls and Check constraints unchecked

- Rows per batch: blank (default)

- Maximum insert commit size: 0

- FastLoadOptions has order hint added: TABLOCK,ORDER(ID)

The data flow DefaultBufferMaxRows and DefaultBufferSize are left at the default of 10000 and 10485760 respectively.

he results from fn_dblog() are

| numrecords | size_mb | ||

| 4716 | 0.31 | ||

| Operation | Context | AvgLen | Cnt |

| LOP_SET_BITS | LCX_GAM | 60 | 1529 |

| LOP_SET_BITS | LCX_IAM | 60 | 1529 |

| LOP_FORMAT_PAGE | LCX_IAM | 84 | 1 |

| LOP_MODIFY_ROW | LCX_PFS | 88 | 1649 |

| LOP_MODIFY_ROW | LCX_IAM | 100 | 8 |

The same as our baseline and therefore minimally logged. The benefit of this setup is that it applies to any volume of data, whereas scenario 2 only helps with data volumes below 100meg. Moving less than 100 meg is a few second job fully logged or unlogged so leaving the Maximum insert commit size at the default and maxing out the DefaultBufferSize is kind of useless. But what about the informational warning we get from the OleDb destination about the package potentially not responding?

[OLE DB Destination [2]] Information: The Maximum insert commit size property of the OLE DB destination "OLE DB Destination" is set to 0. This property setting can cause the running package to stop responding. For more information, see the F1 Help topic for OLE DB Destination Editor (Connection Manager Page).

The help menu (and the excerpt from TechNet pasted in the SSIS Scenario 2 section above) states: A value of 0 might cause the running package to stop responding if the OLE DB destination and another data flow component are updating the same source table. To prevent the package from stopping, set the Maximum insert commit size option to 2147483647.

It only applies when multiple processes are inserting into the same table. We aren’t trying to have multiple processes insert data into our destination table concurrently, in fact we have a table lock enabled which would prevent this anyway. So this warning even doesn’t apply to minimally logged insert scenarios.

Epilogue – Trace Flag 610

Trace flag 610 allows minimally logged inserts into non-empty indexed tables, and turning it on actually does greatly reduce the log activity in scenario 1 (http://technet.microsoft.com/en-us/library/dd425070(v=sql.100).aspx).

| numrecords | size_mb | ||

| 67144 | 5.68 | ||

| Operation | Context | AvgLen | Cnt |

| LOP_DELETE_SPLIT | LCX_INDEX_INTERIOR | 60 | 37 |

| LOP_INSYSXACT | LCX_CLUSTERED | 62 | 284 |

| LOP_INSYSXACT | LCX_INDEX_INTERIOR | 62 | 364 |

| LOP_SET_BITS | LCX_GAM | 62 | 2234 |

| LOP_SET_BITS | LCX_IAM | 62 | 2234 |

| LOP_MODIFY_HEADER | LCX_BULK_OPERATION_PAGE | 76 | 9793 |

| LOP_FORMAT_PAGE | LCX_BULK_OPERATION_PAGE | 84 | 14160 |

| LOP_FORMAT_PAGE | LCX_INDEX_INTERIOR | 84 | 37 |

| LOP_INSERT_ROWS | LCX_INDEX_INTERIOR | 84 | 9793 |

| LOP_MODIFY_HEADER | LCX_HEAP | 84 | 19512 |

| LOP_MODIFY_HEADER | LCX_INDEX_INTERIOR | 84 | 37 |

| LOP_MODIFY_ROW | LCX_PFS | 90 | 6137 |

| LOP_INSERT_ROWS | LCX_CLUSTERED | 196 | 2485 |

| LOP_INSERT_ROWS | LCX_INDEX_INTERIOR | 5012 | 37 |

But just changing the Maximum insert commit size to 0 will result in a true minimally logged insert, so definitely go with that if you want a minimally logged insert into an empty indexed table.

Trace flag 610 is certainly something to look into after the initial load/migration of say large fact tables as it will usually result in a performance increase in subsequent incremental loads.

Good and detailed explanation. thanks

ReplyDeleteThis comment has been removed by the author.

ReplyDeleteThis comment has been removed by the author.

ReplyDelete